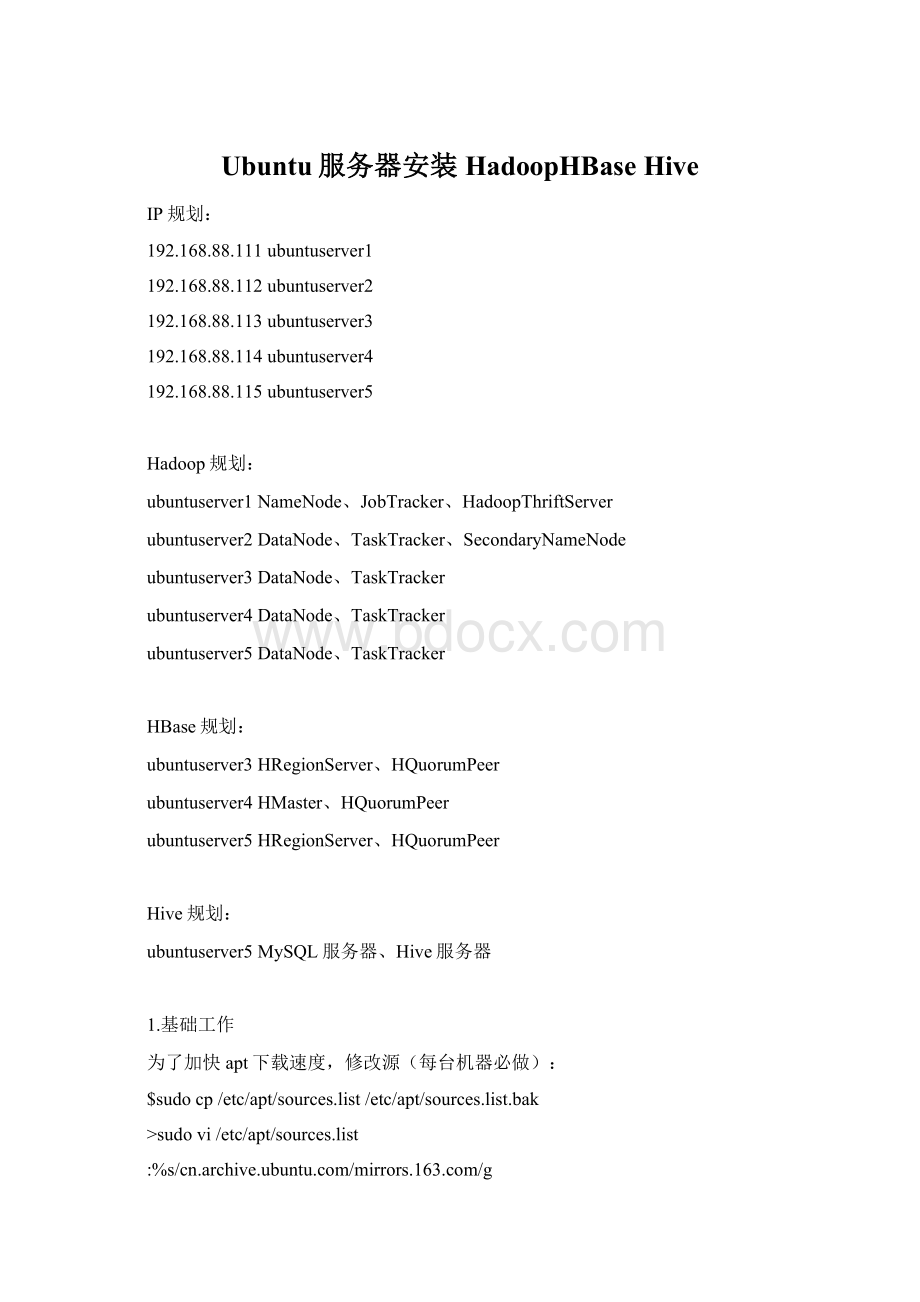

Installing Hadoop, HBase, and Hive on Ubuntu Server

IP plan:

192.168.88.111 ubuntuserver1
192.168.88.112 ubuntuserver2
192.168.88.113 ubuntuserver3
192.168.88.114 ubuntuserver4
192.168.88.115 ubuntuserver5

Hadoop plan:

ubuntuserver1: NameNode, JobTracker, HadoopThriftServer
ubuntuserver2: DataNode, TaskTracker, SecondaryNameNode
ubuntuserver3: DataNode, TaskTracker
ubuntuserver4: DataNode, TaskTracker
ubuntuserver5: DataNode, TaskTracker

HBase plan:

ubuntuserver3: HRegionServer, HQuorumPeer
ubuntuserver4: HMaster, HQuorumPeer
ubuntuserver5: HRegionServer, HQuorumPeer

Hive plan:

ubuntuserver5: MySQL server, Hive server

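1. Groundwork

To speed up apt downloads, switch the package source to a faster mirror (required on every machine). Back up sources.list, open it in vi, replace the mirror host with a :%s/ substitution, save with :wq, and then refresh and upgrade the packages:

> sudo cp /etc/apt/sources.list /etc/apt/sources.list.bak
> sudo vi /etc/apt/sources.list
:%s/
:wq
> sudo apt-get update
> sudo apt-get upgrade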

Set the hostname (required on every machine):

> sudo vi /etc/hostname

Set the IP address (required on every machine):

> sudo vi /etc/network/interfaces
> sudo /etc/init.d/networking restart

Edit the hosts file (required on every machine):

> sudo vi /etc/hosts

127.0.0.1 localhost
192.168.88.111 ubuntuserver1
192.168.88.112 ubuntuserver2
192.168.88.113 ubuntuserver3
192.168.88.114 ubuntuserver4
192.168.88.115 ubuntuserver5
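The five cluster entries follow a regular pattern, so they can be generated instead of typed by hand on each machine. The following is a minimal sketch that assumes the addresses and hostnames from the IP plan; the function name print_cluster_hosts is made up for illustration.

```shell
#!/bin/sh
# Print the cluster's /etc/hosts entries
# (ubuntuserver1..5 on 192.168.88.111..115, as in the IP plan).
# print_cluster_hosts is an illustrative helper, not part of any package.
print_cluster_hosts() {
    i=1
    while [ "$i" -le 5 ]; do
        printf '192.168.88.%d ubuntuserver%d\n' "$((110 + i))" "$i"
        i=$((i + 1))
    done
}

print_cluster_hosts
```

Piping the output through "sudo tee -a /etc/hosts" would append the entries to the hosts file; reviewing the printed lines first is the safer habit.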

Install OpenJDK (required on every machine):

> sudo apt-get install openjdk-6-jdk

Install OpenSSH (required on every machine):

> sudo apt-get install openssh-server

Install the build dependencies (required on every machine):

> sudo apt-get install ant libboost-dev libboost-test-dev libboost-program-options-dev libevent-dev automake libtool flex bison pkg-config g++ libssl-dev

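On ubuntuserver1 through ubuntuserver5, create the hadoop group and user, create the program directories, and hand ownership to the hadoop user:

> sudo addgroup hadoop
> sudo adduser --ingroup hadoop hadoop
> sudo mkdir /opt/hadoop
> sudo mkdir /opt/hadoopdata
> sudo chown -R hadoop:hadoop /opt/hadoop
> sudo chown -R hadoop:hadoop /opt/hadoopdata

On ubuntuserver3, 4, and 5, create the HBase program directory and hand ownership to the hadoop user:

> sudo mkdir /opt/hbase
> sudo chown -R hadoop:hadoop /opt/hbase

On ubuntuserver5, create the Hive program directory and hand ownership to the hadoop user:

> sudo mkdir /opt/hive
> sudo chown -R hadoop:hadoop /opt/hive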
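Which directories a machine needs depends on its role: Hadoop directories everywhere, /opt/hbase on ubuntuserver3 to 5, and /opt/hive only on ubuntuserver5. The sketch below encodes that mapping and prints the commands as a dry run; opt_dirs_for is a hypothetical helper name, and "mkdir -p" is used here so that a rerun does not fail on an existing directory.

```shell
#!/bin/sh
# Print the /opt directories a host needs, following the plan in this guide:
# Hadoop everywhere, HBase on ubuntuserver3-5, Hive on ubuntuserver5 only.
# opt_dirs_for is an illustrative helper name.
opt_dirs_for() {
    dirs="/opt/hadoop /opt/hadoopdata"
    case "$1" in
        ubuntuserver3|ubuntuserver4) dirs="$dirs /opt/hbase" ;;
        ubuntuserver5) dirs="$dirs /opt/hbase /opt/hive" ;;
    esac
    printf '%s\n' "$dirs"
}

# Dry run: show the mkdir/chown commands for one host instead of executing them.
for d in $(opt_dirs_for ubuntuserver5); do
    echo "sudo mkdir -p $d && sudo chown -R hadoop:hadoop $d"
done
```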

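On ubuntuserver1 through ubuntuserver5, set up passwordless SSH login for the hadoop user. Answer yes at the host-key prompt; the first exit leaves the SSH session and the second leaves the hadoop user's shell:

> su hadoop
> ssh-keygen -t rsa -P ""
> cd ~/.ssh
> cat id_rsa.pub >> authorized_keys
> ssh localhost
> yes
> exit
> exit

Once passwordless SSH is configured, log in from ubuntuserver1 as the hadoop user to ubuntuserver2, 3, 4, and 5 in turn and confirm that each login succeeds (the first login to each host asks you to confirm its host key).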
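The commands above only authorize each machine's key on that same machine. For ubuntuserver1 to log in to the other nodes without a password, its public key also has to be present in their authorized_keys files; the guide does not show that step. One common way, assumed here rather than taken from the source, is ssh-copy-id. The sketch only prints the commands; key_push_commands is an illustrative name.

```shell
#!/bin/sh
# Print the ssh-copy-id commands that would install ubuntuserver1's public key
# on the other nodes. Printing only; nothing is executed.
# key_push_commands is an illustrative helper name.
key_push_commands() {
    for n in 2 3 4 5; do
        printf 'ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop@ubuntuserver%s\n' "$n"
    done
}

key_push_commands
```

Each printed command would be run once as the hadoop user on ubuntuserver1 and asks for the remote hadoop password a single time.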

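2. Installation (installation and configuration only need to be done on one machine and can then be copied to the others with scp; all of the following steps are performed on ubuntuserver3)

Switch to the hadoop user:

> su hadoop

Upload or download the Hadoop and HBase packages to /home/hadoop.

Extract them. The files must end up directly in each program's root directory, so that no extra directory level sits below /opt/hadoop or /opt/hbase:

> tar -zxf /home/hadoop/hadoop-1.1.1.tar.gz -C /opt/hadoop
> tar -zxf /home/hadoop/hbase-0.94.4-security.tar.gz -C /opt/hbase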
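A plain "tar -C" keeps whatever top-level directory the archive contains, which would leave the files one level too deep. Assuming each archive wraps its contents in a single top-level directory (for example hadoop-1.1.1/), tar's --strip-components=1 option drops that level during extraction. A minimal sketch, with extract_flat as a hypothetical helper name:

```shell
#!/bin/sh
# Extract a gzip-compressed tarball so that its contents land directly in the
# target directory. --strip-components=1 drops the archive's single top-level
# directory. extract_flat is an illustrative helper name.
extract_flat() {
    archive=$1
    dest=$2
    mkdir -p "$dest" && tar -zxf "$archive" -C "$dest" --strip-components=1
}

# Example: extract_flat /path/to/hadoop-1.1.1.tar.gz /opt/hadoop
```

If an archive has no wrapping directory, this option would strip a real path component instead, so listing the archive with "tar -ztf" first is a cheap check.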

3. Configuring Hadoop

Compile Hadoop:

> cd /opt/hadoop
> ant compile

Delete the hadoop/build directory:

> rm -r /opt/hadoop/build

Change the permissions of start_thrift_server.sh:

> chmod 775 /opt/hadoop/src/contrib/thriftfs/scripts/start_thrift_server.sh

Configure HadoopThriftServer:

> vi /opt/hadoop/src/contrib/thriftfs/scripts/start_thrift_server.sh

TOP=/opt/hadoop
CLASSPATH=$CLASSPATH:$TOP/build/contrib/thriftfs/classes/:$TOP/build/classes/:$TOP/conf/

Configure hadoop-env.sh. Find the line "# export JAVA_HOME=", remove the #, and set it to the local JDK path. The path differs between 32-bit and 64-bit systems, so check the actual path on your machine:

> vi /opt/hadoop/conf/hadoop-env.sh

export JAVA_HOME=/usr/lib/jvm/java-6-openjdk-i386
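Since the JDK directory name depends on the architecture, one way to find it is to resolve the javac binary and strip the trailing /bin/javac. The sketch below shows only the path manipulation on a sample path; java_home_from is a hypothetical helper name, and the assumption is that javac lives in the JDK's bin directory.

```shell
#!/bin/sh
# Derive a JAVA_HOME candidate from the resolved path of a javac binary
# by stripping the trailing /bin/javac. java_home_from is an illustrative name.
java_home_from() {
    dirname "$(dirname "$1")"
}

# On a real machine the resolved path could come from:
#   readlink -f "$(command -v javac)"
java_home_from /usr/lib/jvm/java-6-openjdk-i386/bin/javac
```

On a 64-bit installation the same call would typically yield a directory ending in amd64 rather than i386.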

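Configure core-site.xml:

> vi /opt/hadoop/conf/core-site.xml

<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://ubuntuserver1:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/home/hadoop/tmp</value>
  </property>
</configuration>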

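Configure mapred-site.xml:

> vi /opt/hadoop/conf/mapred-site.xml

<configuration>
  <property>
    <name>mapred.job.tracker</name>
    <value>ubuntuserver1:9001</value>
  </property>
  <property>
    <name>mapred.local.dir</name>
    <value>/opt/hadoopdata/mapred/local</value>
    <final>true</final>
  </property>
  <property>
    <name>mapred.system.dir</name>
    <value>/opt/hadoopdata/mapred/system</value>
    <final>true</final>
  </property>
</configuration>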

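Configure hdfs-site.xml:

> vi /opt/hadoop/conf/hdfs-site.xml

<configuration>
  <property>
    <name>dfs.name.dir</name>
    <value>/opt/hadoopdata/name</value>
  </property>
  <property>
    <name>dfs.data.dir</name>
    <value>/opt/hadoopdata/data</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>3</value>
  </property>
  <property>
    <name>fs.checkpoint.dir</name>
    <value>/opt/hadoopdata/secondary</value>
  </property>
  <property>
    <name>fs.checkpoint.period</name>
    <value>1800</value>
  </property>
  <property>
    <name>fs.checkpoint.size</name>
    <value>33554432</value>
  </property>
  <property>
    <name>fs.trash.interval</name>
    <value>1440</value>
  </property>
  <property>
    <name>dfs.datanode.du.reserved</name>
    <value>1073741824</value>
  </property>
  <property>
    <name>dfs.block.size</name>
    <value>134217728</value>
  </property>
  <property>
    <name>dfs.permissions</name>
    <value>false</value>
  </property>
</configuration>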
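The size values in hdfs-site.xml are plain byte counts, which are easy to mistype. They correspond to 32 MiB (fs.checkpoint.size), 1 GiB (dfs.datanode.du.reserved), and 128 MiB (dfs.block.size); fs.checkpoint.period is in seconds (1800 s is 30 minutes) and fs.trash.interval is in minutes (1440 minutes is 24 hours). A small sketch for reproducing the byte counts, with mib_to_bytes as an illustrative helper name:

```shell
#!/bin/sh
# Convert mebibytes to bytes with shell arithmetic.
# mib_to_bytes is an illustrative helper name.
mib_to_bytes() {
    echo "$(( $1 * 1024 * 1024 ))"
}

mib_to_bytes 32     # fs.checkpoint.size        -> 33554432
mib_to_bytes 128    # dfs.block.size            -> 134217728
mib_to_bytes 1024   # dfs.datanode.du.reserved  -> 1073741824
```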

Configure masters (add the SecondaryNameNode host):

> vi /opt/hadoop/conf/masters

ubuntuserver2

Configure slaves (add the slave hosts, one per line):

> vi /opt/hadoop/conf/slaves

ubuntuserver2
ubuntuserver3
ubuntuserver4
ubuntuserver5

4. Configuring HBase

Configure hbase-env.sh:

> vi /opt/hbase/conf/hbase-env.sh

# Local JDK path; it differs between 32-bit and 64-bit systems
export JAVA_HOME=/usr/lib/jvm/java-6-openjdk-i386
# Run the ZooKeeper bundled with HBase
export HBASE_MANAGES_ZK=true
export HADOOP_HOME=/opt/hadoop
export HBASE_HOME=/opt/hbase

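Configure hbase-site.xml (hbase.zookeeper.quorum must list an odd number of hosts):

> vi /opt/hbase/conf/hbase-site.xml

<configuration>
  <property>
    <name>hbase.rootdir</name>
    <value>hdfs://ubuntuserver1:9000/hbase</value>
  </property>
  <property>
    <name>hbase.cluster.distributed</name>
    <value>true</value>
  </property>
  <property>
    <name>hbase.master</name>
    <value>ubuntuserver4:6000</value>
  </property>
  <property>
    <name>hbase.zookeeper.quorum</name>
    <value>ubuntuserver3,ubuntuserver4,ubuntuserver5</value>
  </property>
</configuration>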
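The reason for an odd quorum size comes from majority voting: a ZooKeeper ensemble of n peers stays available as long as more than half of them are up, so it tolerates (n - 1) / 2 failures, rounded down. Going from 3 to 4 peers therefore adds a machine without adding fault tolerance. The sketch below works through that arithmetic; both function names are made up for illustration.

```shell
#!/bin/sh
# Count the hosts in a comma-separated quorum string.
quorum_size() {
    printf '%s\n' "$1" | tr ',' '\n' | grep -c .
}

# A majority quorum of n peers keeps working with up to (n - 1) / 2 peers down
# (integer division).
tolerated_failures() {
    echo "$(( ($1 - 1) / 2 ))"
}

n=$(quorum_size "ubuntuserver3,ubuntuserver4,ubuntuserver5")
echo "peers=$n tolerated_failures=$(tolerated_failures "$n")"
```

With the three hosts used in this guide, one quorum peer can fail while ZooKeeper stays available.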

Configure regionservers:

> vi /opt/hbase/conf/regionservers

ubuntuserver3
ubuntuserver5

5. Copying with scp

Copy the /opt/hadoop directory from ubuntuserver3 to the same location on ubuntuserver1, 2, 4, and 5:

> scp -r /opt/hadoop hadoop@ubuntuserver1:/opt
> scp -r /opt/hadoop hadoop@ubuntuserver2:/opt
> scp -r /opt/hadoop hadoop@ubuntuserver4:/opt
> scp -r /opt/hadoop hadoop@ubuntuserver5:/opt

Copy the /opt/hbase directory from ubuntuserver3 to the same location on ubuntuserver4 and 5:

> scp -r /opt/hbase hadoop@ubuntuserver4:/opt
> scp -r /opt/hbase hadoop@ubuntuserver5:/opt
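The copy step repeats one command per target host, which a loop expresses more compactly. The sketch below only prints the commands so they can be reviewed first; scp_commands is a hypothetical helper, and the host numbers are the targets listed for /opt/hadoop.

```shell
#!/bin/sh
# Print one scp command per target host. Printing only; nothing is copied.
# scp_commands is an illustrative helper: the first argument is the directory
# to copy, the remaining arguments are ubuntuserver host numbers.
scp_commands() {
    dir=$1
    shift
    for n in "$@"; do
        printf 'scp -r %s hadoop@ubuntuserver%s:/opt\n' "$dir" "$n"
    done
}

scp_commands /opt/hadoop 1 2 4 5
```

The same function can be called with /opt/hbase and its own host list. Piping the output to sh would execute the copies.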

6. Formatting the NameNode. Log in to ubuntuserver1 as the hadoop user and run:

> /opt/hadoop/bin/hadoop namenode -format

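7. Starting and stopping the cluster

To start the Hadoop cluster, log in to ubuntuserver1 as the hadoop user and run:

> /opt/hadoop/bin/start-all.sh

Run jps on each machine and check its processes. If they match the ones listed in the Hadoop plan, the start succeeded.

To stop the Hadoop cluster, log in to ubuntuserver1 as the hadoop user and run:

> /opt/hadoop/bin/stop-all.sh

Stop the HBase cluster before stopping the Hadoop cluster.

To start HadoopThriftServer (port 9606 is specified here; if no port is given, a random one is used), log in to ubuntuserver1 as the hadoop user and run:

> /opt/hadoop/src/contrib/thriftfs/scripts/start_thrift_server.sh 9606 &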

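To start the HBase cluster, log in to ubuntuserver4 as the hadoop user (the owner of /opt/hbase) and run:

> /opt/hbase/bin/start-hbase.sh

Start the Hadoop cluster before starting the HBase cluster.

Run jps on each machine and check its processes. If they match the ones listed in the HBase plan, the start succeeded.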
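Comparing jps output against the plan by eye is error-prone across five machines. The sketch below reads jps output on standard input and prints every expected daemon that is missing; missing_daemons is a hypothetical helper, and the sample input stands in for real jps output on ubuntuserver4.

```shell
#!/bin/sh
# Read jps output on stdin and print each expected daemon that is not listed.
# jps prints one "<pid> <name>" pair per line.
# missing_daemons is an illustrative helper name.
missing_daemons() {
    jps_output=$(cat)
    for daemon in "$@"; do
        printf '%s\n' "$jps_output" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }' ||
            printf '%s\n' "$daemon"
    done
}

# Sample input standing in for real jps output on ubuntuserver4,
# where the HBase daemons have not started yet.
printf '2101 DataNode\n2240 TaskTracker\n2388 Jps\n' |
    missing_daemons DataNode TaskTracker HMaster HQuorumPeer
```

On a live node the call would be "jps | missing_daemons HMaster HQuorumPeer", with the daemon names taken from the plan for that host; empty output means everything expected is running.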

To stop the HBase cluster, log in to ubuntuserver4 as the hadoop user and run:

> /opt/hbase/bin/stop-hbase.sh

To start the HBase Thrift server (default port: 9090), log in to ubuntuserver4 as the hadoop user and run:

> /opt/hbase/bin/hbase thrift start &

Open the HBase shell:

> /opt/hbase/bin/hbase shell
hbase> list

HBase shell syntax (http://wiki.apache.org/hadoop/Hbase/Shell):

Create a table:

create 'Article', {NAME => 'Data', VERSIONS => 1}

List tables:

list

8. Installing and configuring Hive (ubuntuserver5)

Install the MySQL server:

> sudo apt-get install mysql-server

Configure MySQL:

> sudo vi /etc/mysql/my.cnf

# Comment out bind-address
#bind-address = 127.0.0.1

> sudo service mysql restart

Create the account that Hive will use (user name: hive, password: hivepwd, remote login allowed from any host):

> mysql -uroot -p
mysql> insert into mysql.user (Host, User, Password) values ('%', 'hive', password('hivepwd'));
mysql> grant all privileges on *.* to 'hive'@'%' identified by 'hivepwd' with grant option;
mysql> flush privileges;
mysql> exit;

Switch to the hadoop user:

> su - hadoop

Upload or download the Hive package to /home/hadoop.

Extract it:

> tar -zxf /home/hadoop/hive-0.10.0-bin.tar.gz -C /opt/hive

Download MySQL Connector/J 5.1.24 to /home/hadoop, extract it, and copy mysql-connector-java-5.1.24-bin.jar to /opt/hive/lib:

> wget http://mysql.ntu.edu.tw/Downloads/Connector-J/mysql-connector-java-5.1.24.tar.gz
> tar -zxf mysql-connector-java-5.1.24.tar.gz
> cp mysql-connector-java-5.1.24/mysql-connector-java-5.1.24-bin.jar /opt/hive/lib

Delete the older hbase-xxxxx.jar, hbase-xxxxx-tests.jar, and zookeeper-xxxx.jar from /opt/hive/lib:

> rm /opt/hive/lib/hbase-0.92.0.jar
> rm /opt/hive/lib/hbase-0.92.0-tests.jar
> rm /opt/hive/lib/zookeeper-3.4.3.jar

Copy hbase-xxxxx.jar and hbase-xxxxx-tests.jar from /opt/hbase, and zookeeper-xxxx.jar and protobuf-java-xxxxx.jar from /opt/hbase/lib, to /opt/hive/lib:

> cp /opt/hbase/hbase-0.94.4-security.jar /opt/hive/lib
> cp /opt/hbase/hbase-0.94.4-security-tests.jar /opt/hive/lib
> cp /opt/hbase/lib/zookeeper-3.4.5.jar /opt/hive/lib
> cp /opt/hbase/lib/protobuf-java-2.4.0a.jar /opt/hive/lib/
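The version numbers in the jar names depend on the Hive and HBase releases in use, so it helps to list what is actually in the lib directory before and after the swap. A minimal sketch, with list_bundled_jars as a hypothetical helper name:

```shell
#!/bin/sh
# List the hbase-*.jar and zookeeper-*.jar files in a lib directory, so the
# bundled versions can be checked before removing or after copying them.
# list_bundled_jars is an illustrative helper name.
list_bundled_jars() {
    for f in "$1"/hbase-*.jar "$1"/zookeeper-*.jar; do
        # An unmatched glob stays literal and is skipped by the -e test.
        [ -e "$f" ] && basename "$f"
    done
    return 0
}

# Example: list_bundled_jars /opt/hive/lib
```

After the copy step, the listing should show only the HBase and ZooKeeper versions that match the running cluster.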

Configure hive-env.sh:

> cp /opt/hive/conf/hive-env.sh.template /opt/hive/conf/hive-env.sh
> vi /opt/hive/conf/hive-env.sh

export JAVA_HOME=/usr/lib/jvm/java-6-openjdk-i386
export HADOOP_HEAPSIZE=64
export HADOOP_HOME=/opt/hadoop
export HBASE_HOME=/opt/hbase
export HIVE_HOME=/opt/hive

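Configure hive-site.xml by adding or modifying the following properties. Note that some description tags in the shipped template are not closed; use the error message to locate the offending line and fix it:

> cp /opt/hive/conf/hive-default.xml.template /opt/hive/conf/hive-site.xml
> vi /opt/hive/conf/hive-site.xml

<property>
  <name>hbase.zookeeper.quorum</name>
  <value>ubuntuserver3,ubuntuserver4,ubuntuserver5</value>
</property>
<property>
  <name>hive.metastore.local</name>
  <value>true</value>
</property>
<property>
  <name>javax.jdo.option.ConnectionURL</name>
  <value>jdbc:mysql://localhost:3306/HiveDB?createDatabaseIfNotExist=true</value>
</property>
<property>
  <name>javax.jdo.option.ConnectionDriverName</name>
  <value>com.mysql.jdbc.Driver</value>
</property>
<property>
  <name>javax.jdo.option.ConnectionUserName</name>
  <value>hive</value>
</property>
<property>
  <name>javax.jdo.option.ConnectionPassword</name>
  <value>hivepwd</value>
</property>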
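The metastore connection URL packs several settings into one string: the MySQL host, the port, the database name, and a flag asking the driver to create the database if it does not exist. The sketch below assembles the URL from its parts to make them visible; hive_jdbc_url is a hypothetical helper, and the values are the ones used in this guide.

```shell
#!/bin/sh
# Assemble the metastore JDBC URL from host, port, and database name.
# createDatabaseIfNotExist=true asks Connector/J to create the database
# on first connect. hive_jdbc_url is an illustrative helper name.
hive_jdbc_url() {
    printf 'jdbc:mysql://%s:%s/%s?createDatabaseIfNotExist=true\n' "$1" "$2" "$3"
}

hive_jdbc_url localhost 3306 HiveDB
```

localhost works here because MySQL and Hive both run on ubuntuserver5; a metastore on another machine would need that machine's hostname instead.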

Configure hive-log4j.properties:

This step is special handling for version 0.10.0.

cp/opt/hive/con