svm.docx

《svm.docx》由会员分享,可在线阅读,更多相关《svm.docx(29页珍藏版)》请在冰豆网上搜索。

svm

SeparableData

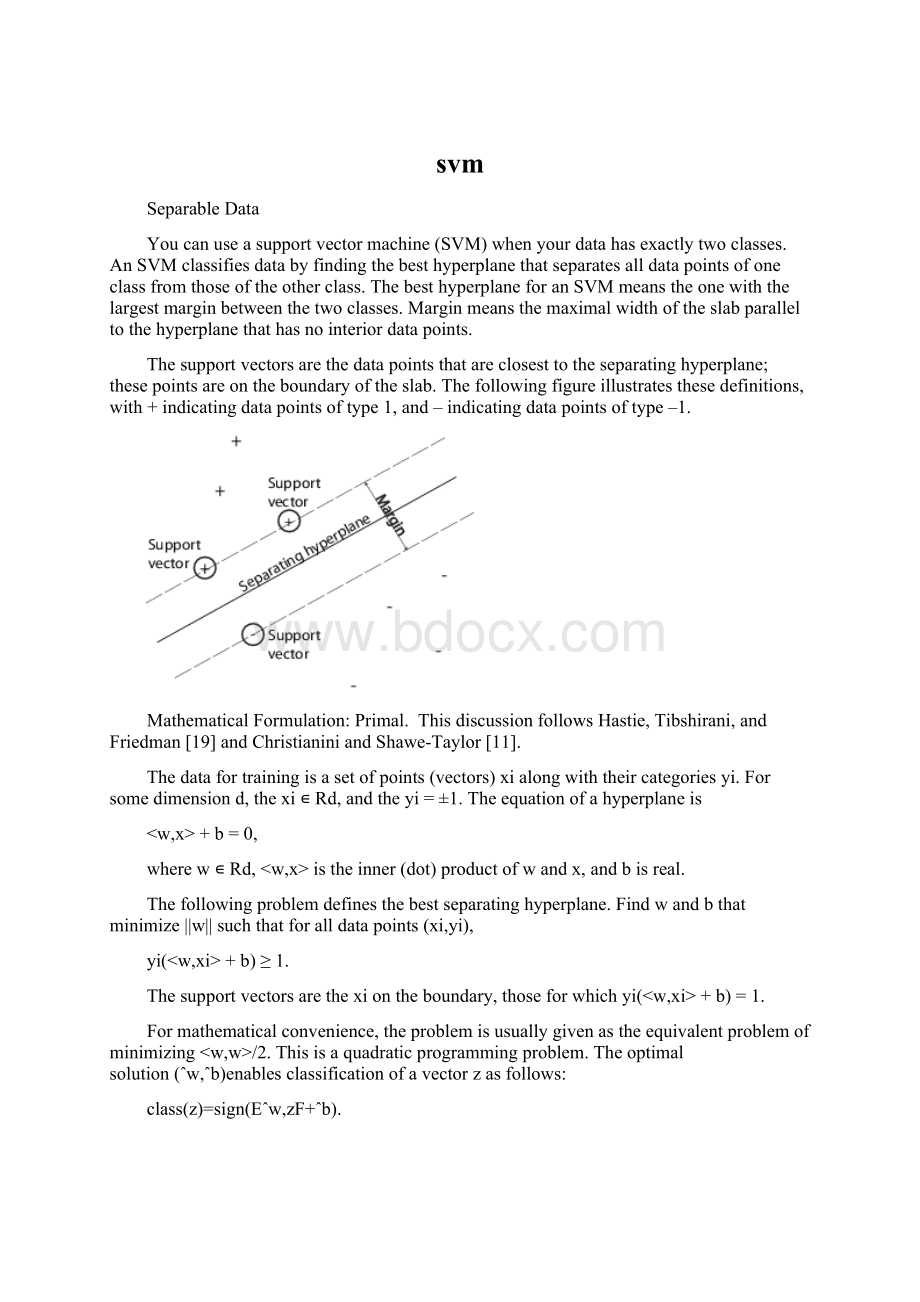

Youcanuseasupportvectormachine(SVM)whenyourdatahasexactlytwoclasses.An SVM classifiesdatabyfindingthebesthyperplanethatseparatesalldatapointsofoneclassfromthoseoftheotherclass.The best hyperplaneforan SVM meanstheonewiththelargest margin betweenthetwoclasses.Marginmeansthemaximalwidthoftheslabparalleltothehyperplanethathasnointeriordatapoints.

The supportvectors arethedatapointsthatareclosesttotheseparatinghyperplane;thesepointsareontheboundaryoftheslab.Thefollowingfigureillustratesthesedefinitions,with+indicatingdatapointsoftype1,and–indicatingdatapointsoftype–1.

MathematicalFormulation:

Primal. ThisdiscussionfollowsHastie,Tibshirani,andFriedman [19] andChristianiniandShawe-Taylor [11].

Thedatafortrainingisasetofpoints(vectors) xi alongwiththeircategories yi.Forsomedimension d,the xi ∊ Rd,andthe yi = ±1.Theequationofahyperplaneis

+ b =0,

where w ∊ Rd,istheinner(dot)productof w and x,and b isreal.

Thefollowingproblemdefinesthe best separatinghyperplane.Find w and b thatminimize||w||suchthatforalldatapoints(xi,yi),

yi(+ b)≥1.

Thesupportvectorsarethe xi ontheboundary,thoseforwhich yi( + b) = 1.

Formathematicalconvenience,theproblemisusuallygivenastheequivalentproblemofminimizing/2.Thisisaquadraticprogrammingproblem.Theoptimalsolution (ˆw,ˆb)enablesclassificationofavector z asfollows:

class(z)=sign(Eˆw,zF+ˆb).

MathematicalFormulation:

Dual. Itiscomputationallysimplertosolvethedualquadraticprogrammingproblem.Toobtainthedual,takepositiveLagrangemultipliers αi multipliedbyeachconstraint,andsubtractfromtheobjectivefunction:

LP=12Ew,wF−iαi(yi(Ew,xiF+b)−1),

whereyoulookforastationarypointof LP over w and b.Settingthegradientof LP to0,youget

w0=iαiyixi=iαiyi.

(16-1)

Substitutinginto LP,yougetthedual LD:

LD=iαi−12ijαiαjyiyjExi,xjF,

whichyoumaximizeover αi ≥ 0.Ingeneral,many αi are0atthemaximum.Thenonzero αi inthesolutiontothedualproblemdefinethehyperplane,asseenin Equation 16-1,whichgivesw asthesumof αiyixi.Thedatapoints xi correspondingtononzero αi arethe supportvectors.

Thederivativeof LD withrespecttoanonzero αi is0atanoptimum.Thisgives

yi(+ b)–1=0.

Inparticular,thisgivesthevalueof b atthesolution,bytakingany i withnonzero αi.

Thedualisastandardquadraticprogrammingproblem.Forexample,theOptimizationToolbox™ quadprog solversolvesthistypeofproblem.

NonseparableData

Yourdatamightnotallowforaseparatinghyperplane.Inthatcase, SVM canusea softmargin,meaningahyperplanethatseparatesmany,butnotalldatapoints.

Therearetwostandardformulationsofsoftmargins.Bothinvolveaddingslackvariables si andapenaltyparameter C.

∙The L1-normproblemis:

minw,b,s(12Ew,wF+Cisi)

suchthat

yi(Ew,xiF+b)si≥1−si≥0.

The L1-normreferstousing si asslackvariablesinsteadoftheirsquares.Thethreesolveroptions SMO, ISDA,and L1Qp of fitcsvm minimizethe L1-normproblem.

∙The L2-normproblemis:

minw,b,s(12Ew,wF+Cis2i)

subjecttothesameconstraints.

Intheseformulations,youcanseethatincreasing C placesmoreweightontheslackvariables si,meaningtheoptimizationattemptstomakeastricterseparationbetweenclasses.Equivalently,reducing C towards0makesmisclassificationlessimportant.

MathematicalFormulation:

Dual. Foreasiercalculations,considerthe L1 dualproblemtothissoft-marginformulation.UsingLagrangemultipliers μi,thefunctiontominimizeforthe L1-normproblemis:

LP=12Ew,wF+Cisi−iαi(yi(Ew,xiF+b)−(1−si))−iμisi,

whereyoulookforastationarypointof LP over w, b,andpositive si.Settingthegradientof LP to0,youget

biαiyiαiαi,μi,si=iαiyixi=0=C−μi≥0.

Theseequationsleaddirectlytothedualformulation:

maxαiαi−12ijαiαjyiyjExi,xjF

subjecttotheconstraints

iyiαi=00≤αi≤C.

Thefinalsetofinequalities,0 ≤ αi ≤ C,showswhy C issometimescalleda boxconstraint. C keepstheallowablevaluesoftheLagrangemultipliers αi ina"box",aboundedregion.

Thegradientequationfor b givesthesolution b intermsofthesetofnonzero αi,whichcorrespondtothesupportvectors.

Youcanwriteandsolvethedualofthe L2-normprobleminananalogousmanner.Fordetails,seeChristianiniandShawe-Taylor [11],Chapter6.

fitcsvmImplementation. Bothdualsoft-marginproblemsarequadraticprogrammingproblems.Internally, fitcsvm hasseveraldifferentalgorithmsforsolvingtheproblems.

∙Forone-classorbinaryclassification,ifyoudonotsetafractionofexpectedoutliersinthedata(see OutlierFraction),thenthedefaultsolverisSequentialMinimalOptimization(SMO).SMOminimizestheone-normproblembyaseriesoftwo-pointminimizations.Duringoptimization,SMOrespectsthelinearconstraint iαiyi=0, andexplicitlyincludesthebiasterminthemodel.SMOisrelativelyfast.FormoredetailsonSMO,see [13].

∙Forbinaryclassification,ifyousetafractionofexpectedoutliersinthedata,thenthedefaultsolveristheIterativeSingleDataAlgorithm.LikeSMO,ISDAsolvestheone-normproblem.UnlikeSMO,ISDAminimizesbyaseriesonone-pointminimizations,doesnotrespectthelinearconstraint,anddoesnotexplicitlyincludethebiasterminthemodel.FormoredetailsonISDA,see [22].

∙Forone-classorbinaryclassification,andifyouhaveanOptimizationToolboxlicense,youcanchoosetouse quadprog tosolvetheone-normproblem. quadprog usesagooddealofmemory,butsolvesquadraticprogramstoahighdegreeofprecision.Formoredetails,see QuadraticProgrammingDefinition.

NonlinearTransformationwithKernels

Somebinaryclassificationproblemsdonothaveasimplehyperplaneasausefulseparatingcriterion.Forthoseproblems,thereisavariantofthemathematicalapproachthatretainsnearlyallthesimplicityofan SVM separatinghyperplane.

Thisapproachusestheseresultsfromthetheoryofreproducingkernels:

∙Thereisaclassoffunctions K(x,y)withthefollowingproperty.Thereisalinearspace S andafunction φ mapping x to S suchthat

K(x,y)=<φ(x),φ(y)>.

Thedotproducttakesplaceinthespace S.

∙Thisclassoffunctionsincludes:

oPolynomials:

Forsomepositiveinteger d,

K(x,y)=(1+)d.

oRadialbasisfunction(Gaussian):

Forsomepositivenumber σ,

K(x,y)=exp(–<(x–y),(x – y)>/(2σ2)).

oMultilayerperceptron(neuralnetwork):

Forapositivenumber p1 andanegativenumber p2,

K(x,y)=tanh(p1+ p2).

Note:

∙Noteverysetof p1 and p2 givesavalidreproducingkernel.

∙fitcsvm doesnotsupportthesigmoidkernel.

Themathematicalapproachusingkernelsreliesonthecomputationalmethodofhyperplanes.Allthecalculationsforhyperplaneclassificationusenothingmorethandotproducts.Therefore,nonlinearkernelscanuseidenticalcalculationsandsolutionalgorithms,andobtainclassifiersthatarenonlinear.Theresultingclassifiersarehypersurfacesinsomespace S,butthespace S doesnothavetobeidentifiedorexamined.

UsingSupportVectorMachines

Aswithanysupervisedlearningmodel,youfirsttrainasupportvectormachine,andthencrossvalidatetheclassifier.Usethetrainedmachinetoclassify(predict)newdata.Inaddition,toobtainsatisfactorypredictiveaccuracy,youcanusevarious SVM kernelfunctions,andyoumusttunetheparametersofthekernelfunctions.

∙Trainingan SVM Classifier

∙ClassifyingNewDatawithan SVM Classifier

∙Tuningan SVM Classifier

Trainingan SVM Classifier

Train,andoptionallycrossvalidate,an SVM classifierusing fitcsvm.Themostcommonsyntaxis:

SVMModel=fitcsvm(X,Y,'KernelFunction','rbf','Standardize',true,'ClassNames',{'negClass','posClass'});

Theinputsare:

∙X —Matrixofpredictordata,whereeachrowisoneobservation,andeachcolumnisonepredictor.

∙Y —Arrayofclasslabelswitheachrowcorrespondingtothevalueofthecorrespondingrowin X. Y canbeacharacterarray,categorical,logicalornumericvector,orvectorcellarrayofstrings.Columnvectorwitheachrowcorrespondingtothevalueofthecorrespondingrowin X. Y canbeacategoricalorcharacterarray,logicalornumericvector,orcellarrayofstrings.

∙KernelFunction —Thedefaultvalueis 'linear' fortwo-classlearning,whichseparatesthedatabyahyperplane.Thevalue 'rbf' isthedefaultforone-classlearning,andusesaGaussianradialbasisfunction.Animportantsteptosuccessfullytrainan SVM classifieristochooseanappropriatekernelfunction.

∙Standardize —Flagindicatingwhetherthesoftwareshouldstandardizethepredictorsbeforetrainingtheclassifier.

∙ClassNames —Distinguishesbetweenthenegativeandpositiveclasses,orspecifieswhichclassestoincludeinthedata.Thenegativeclassisthefirstelement(orrowofacharacterarray),e.g., 'negClass',andthepositiveclassisthesecondelement(orrowofacharacterarray),e.g., 'posClass'. ClassNames mustbethesamedatatypeas Y.Itisgoodpracticetospecifytheclassnames,especiallyifyouarecomparingtheperformanceofdifferentclassifiers.

Theresulting,trainedmodel(SVMModel)containstheoptimizedparametersfromthe SVM algorithm,enablingyoutoclassifynewdata.

Formorename-valuepairsyoucanusetocontrolthetraining,seethe fitcsvm referencepage.

ClassifyingNewDatawithan SVM Classifier

Classifynewdatausing predict.Thesyntaxforclassifyingnewdatausingatrained SVM classifier(SVMModel)is:

[label,score]=predict(SVMModel,newX);

Theresultingvector, label,representstheclassificationofeachrowin X. score isan n-by-2matrix