Nonlinear Least Squares: Levenberg–Marquardt Method


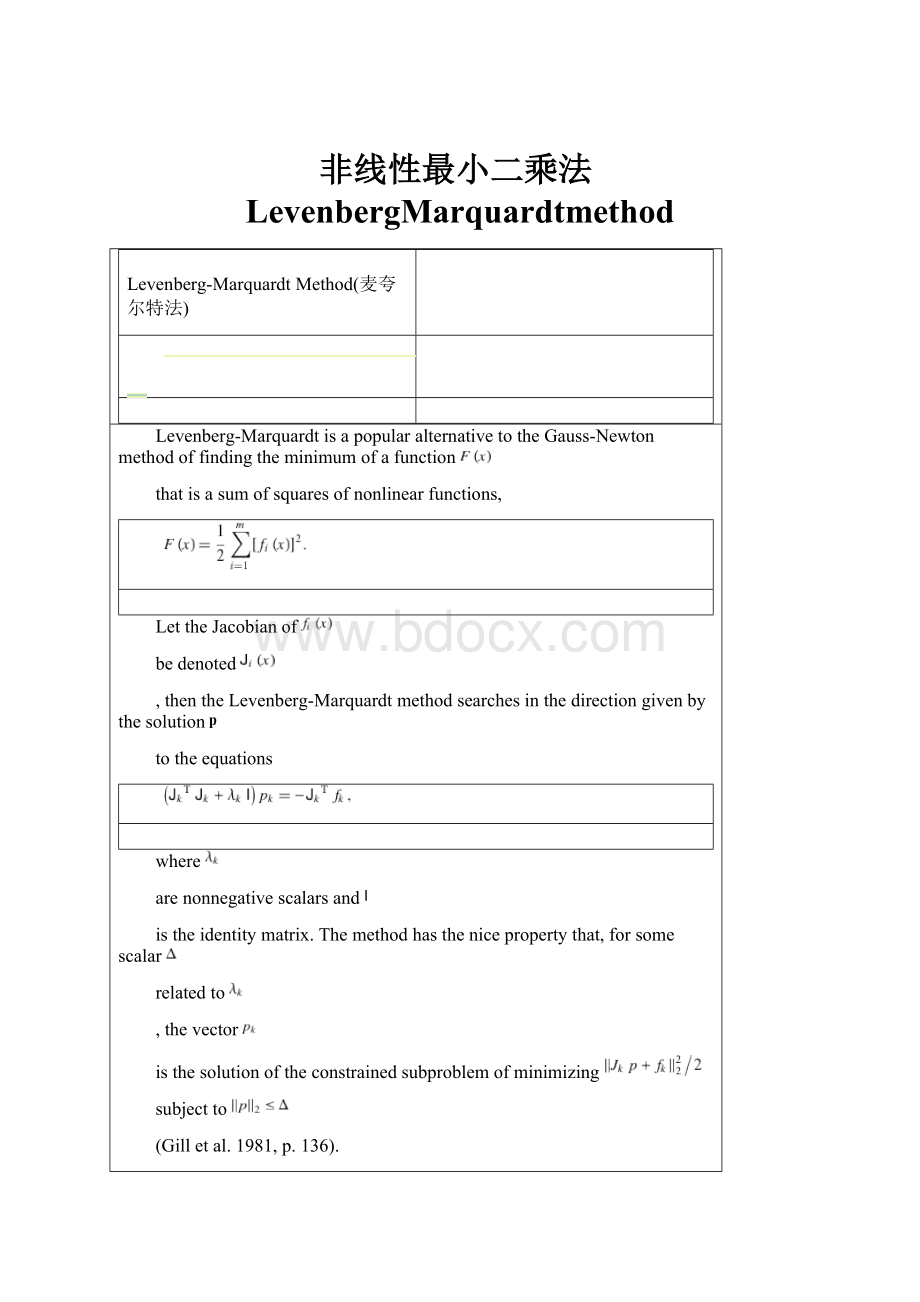


Levenberg-Marquardt Method (Marquardt method)

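Levenberg-Marquardt is a popular alternative to the Gauss-Newton method of finding the minimum of a function $F(\mathbf{x})$ that is a sum of squares of nonlinear functions,

$$F(\mathbf{x}) = \frac{1}{2}\sum_{i=1}^{m}\left[f_i(\mathbf{x})\right]^2.$$

Let the Jacobian of $f_i(\mathbf{x})$ be denoted $\mathbf{J}_i(\mathbf{x})$; then the Levenberg-Marquardt method searches in the direction $\mathbf{p}_k$ given by the solution to the equations

$$\left(\mathbf{J}_k^{T}\mathbf{J}_k + \lambda_k \mathbf{I}\right)\mathbf{p}_k = -\mathbf{J}_k^{T}\mathbf{f}_k,$$

where $\lambda_k$ are nonnegative scalars and $\mathbf{I}$ is the identity matrix. The method has the nice property that, for some scalar $\Delta$ related to $\lambda_k$, the vector $\mathbf{p}_k$ is the solution of the constrained subproblem of minimizing

$$\tfrac{1}{2}\left\lVert \mathbf{J}_k\mathbf{p} + \mathbf{f}_k \right\rVert_2^2$$

subject to $\lVert \mathbf{p} \rVert_2 \le \Delta$ (Gill et al. 1981, p. 136).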
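To make the direction equation concrete, here is a minimal pure-Python sketch (standard library only) that solves $(\mathbf{J}_k^T\mathbf{J}_k + \lambda_k\mathbf{I})\mathbf{p}_k = -\mathbf{J}_k^T\mathbf{f}_k$ for a small made-up Jacobian and residual vector, and prints the step length for increasing $\lambda_k$. The helper names solve and lm_direction and the numbers in J and f are hypothetical, chosen only for illustration. The shrinking $\lVert\mathbf{p}_k\rVert_2$ mirrors the trust-region property quoted above: a larger $\lambda_k$ corresponds to a smaller radius $\Delta$.

```python
import math


def solve(a, b):
    """Solve the square linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def lm_direction(J, f, lam):
    """Return p solving (J^T J + lam * I) p = -J^T f."""
    n = len(J[0])
    JtJ = [[sum(row[i] * row[j] for row in J) for j in range(n)] for i in range(n)]
    Jtf = [sum(row[i] * fi for row, fi in zip(J, f)) for i in range(n)]
    A = [[JtJ[i][j] + (lam if i == j else 0.0) for j in range(n)] for i in range(n)]
    return solve(A, [-g for g in Jtf])


# Hypothetical 3x2 Jacobian J_k and residual vector f_k, for illustration only.
J = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
f = [1.0, -1.0, 2.0]

norms = []
for lam in (0.0, 0.1, 1.0, 10.0, 100.0):
    p = lm_direction(J, f, lam)
    norms.append(math.sqrt(sum(pi * pi for pi in p)))
    print(f"lambda = {lam:6.1f}   |p|_2 = {norms[-1]:.6f}")
```

With $\lambda_k = 0$ the direction is the Gauss-Newton step; as $\lambda_k$ grows, the step both shortens and turns toward $-\mathbf{J}_k^T\mathbf{f}_k$.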

The method is used by the command FindMinimum[f, {x, x0}] when given the Method -> LevenbergMarquardt option.


SEE ALSO: Minimum, Optimization


REFERENCES:

Bates, D. M. and Watts, D. G. Nonlinear Regression and Its Applications. New York: Wiley, 1988.

Gill, P. E.; Murray, W.; and Wright, M. H. "The Levenberg-Marquardt Method." §4.7.3 in Practical Optimization. London: Academic Press, pp. 136-137, 1981.

Levenberg, K. "A Method for the Solution of Certain Non-Linear Problems in Least Squares." Quart. Appl. Math. 2, 164-168, 1944.

Marquardt, D. "An Algorithm for Least-Squares Estimation of Nonlinear Parameters." SIAM J. Appl. Math. 11, 431-441, 1963.

Levenberg–Marquardt algorithm

From Wikipedia, the free encyclopedia


In mathematics and computing, the Levenberg–Marquardt algorithm (LMA)[1] provides a numerical solution to the problem of minimizing a function, generally nonlinear, over a space of parameters of the function. These minimization problems arise especially in least squares curve fitting and nonlinear programming.

The LMA interpolates between the Gauss–Newton algorithm (GNA) and the method of gradient descent. The LMA is more robust than the GNA, which means that in many cases it finds a solution even if it starts very far off the final minimum. For well-behaved functions and reasonable starting parameters, the LMA tends to be a bit slower than the GNA. The LMA can also be viewed as Gauss–Newton using a trust region approach.

The LMA is a very popular curve-fitting algorithm used in many software applications for solving generic curve-fitting problems. However, the LMA finds only a local minimum, not a global minimum.


Caveat Emptor

One important limitation that is very often overlooked is that the method only optimises for residual errors in the dependent variable (y). It thereby implicitly assumes that any errors in the independent variable are zero, or at least that the ratio of the two is so small as to be negligible. This is not a defect; it is intentional, but it must be taken into account when deciding whether to use this technique to do a fit. While this may be suitable in the context of a controlled experiment, there are many situations where this assumption cannot be made. In such situations, either non-least-squares methods should be used, or the least-squares fit should be done in proportion to the relative errors in the two variables, not simply the vertical "y" error. Failing to recognise this can lead to a fit that is significantly incorrect and fundamentally wrong. It will usually underestimate the magnitude of the slope. This may or may not be obvious to the eye.

Microsoft Excel's chart trend-line fit has this limitation, and it is undocumented. Users often fall into this trap, assuming the fit is correctly calculated for all situations. The OpenOffice spreadsheet copied this feature and presents the same problem.
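The slope bias described above can be reproduced with a small simulation. The sketch below is an illustration under stated assumptions: it generates synthetic points on the line $y = 2x$, adds Gaussian noise of equal standard deviation to both $x$ and $y$, and compares the ordinary (vertical-error) least-squares slope with a Deming regression slope. Deming regression is one standard way of weighting the fit by the ratio of the error variances in the two variables; it is our choice of remedy, not one named in the text. The function names, sample size, noise level and variance ratio delta = 1 are all assumptions of this sketch.

```python
import math
import random


def ols_slope(xs, ys):
    """Ordinary least-squares slope: minimises vertical (y) residuals only."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / sxx


def deming_slope(xs, ys, delta=1.0):
    """Deming regression slope; delta = (variance of y errors) / (variance of x errors)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs) / n
    syy = sum((y - my) ** 2 for y in ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / n
    d = syy - delta * sxx
    return (d + math.sqrt(d * d + 4.0 * delta * sxy * sxy)) / (2.0 * sxy)


random.seed(0)
x_true = [random.uniform(0.0, 10.0) for _ in range(5000)]
# Synthetic data on y = 2x; both coordinates observed with noise of standard deviation 2.
xs = [x + random.gauss(0.0, 2.0) for x in x_true]
ys = [2.0 * x + random.gauss(0.0, 2.0) for x in x_true]

ols = ols_slope(xs, ys)
dem = deming_slope(xs, ys, delta=1.0)
print(f"ordinary least-squares slope: {ols:.3f}")
print(f"Deming slope:                 {dem:.3f}")
```

With these settings the ordinary slope is attenuated toward zero (its expected value is about $2 \cdot 8.33/(8.33 + 4) \approx 1.35$, since the true $x$ values have variance $100/12$ and the $x$ noise has variance 4), while the Deming slope stays close to the true value 2.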

The problem

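The primary application of the Levenberg–Marquardt algorithm is in the least squares curve fitting problem: given a set of $m$ empirical datum pairs of independent and dependent variables, $(x_i, y_i)$, optimize the parameters $\beta$ of the model curve $f(x, \beta)$ so that the sum of the squares of the deviations

$$S(\beta) = \sum_{i=1}^{m} \left[ y_i - f(x_i, \beta) \right]^2$$

becomes minimal.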
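As a small illustration of the objective, the sketch below evaluates $S(\beta)$ for a hypothetical exponential model $f(x, \beta) = \beta_0 e^{\beta_1 x}$ on noise-free synthetic data. The model, the data and the function names are assumptions made for this example.

```python
import math


def sum_of_squares(model, beta, xs, ys):
    """S(beta): sum of squared deviations y_i - f(x_i, beta)."""
    return sum((y - model(x, beta)) ** 2 for x, y in zip(xs, ys))


def exp_model(x, beta):
    # Hypothetical model f(x, beta) = beta_0 * exp(beta_1 * x), used only for illustration.
    return beta[0] * math.exp(beta[1] * x)


xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.0 * math.exp(-0.5 * x) for x in xs]  # noise-free data from beta = (2, -0.5)

s_true = sum_of_squares(exp_model, [2.0, -0.5], xs, ys)
s_guess = sum_of_squares(exp_model, [1.0, 1.0], xs, ys)
print(s_true)   # 0.0: the generating parameters fit exactly
print(s_guess)  # large: the guess (1, 1) is far from the data
```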

The solution

Like other numeric minimization algorithms, the Levenberg–Marquardt algorithm is an iterative procedure. To start a minimization, the user has to provide an initial guess for the parameter vector $\beta$. In many cases, an uninformed standard guess like $\beta^T = (1, 1, \dots, 1)$ will work fine; in other cases, the algorithm converges only if the initial guess is already somewhat close to the final solution.

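In each iteration step, the parameter vector $\beta$ is replaced by a new estimate $\beta + \delta$. To determine $\delta$, the functions $f(x_i, \beta + \delta)$ are approximated by their linearizations

$$f(x_i, \beta + \delta) \approx f(x_i, \beta) + J_i \delta,$$

where

$$J_i = \frac{\partial f(x_i, \beta)}{\partial \beta}$$

is the gradient (a row vector in this case) of $f$ with respect to $\beta$.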

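At the minimum of the sum of squares $S(\beta)$, the gradient of $S$ with respect to $\delta$ is zero. The above first-order approximation of $f(x_i, \beta + \delta)$ gives

$$S(\beta + \delta) \approx \sum_{i=1}^{m} \left( y_i - f(x_i, \beta) - J_i \delta \right)^2,$$

or, in vector notation,

$$S(\beta + \delta) \approx \left\lVert y - f(\beta) - J\delta \right\rVert^2.$$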

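Taking the derivative with respect to $\delta$ and setting the result to zero gives

$$\left(J^T J\right)\delta = J^T \left[ y - f(\beta) \right],$$

where $J$ is the Jacobian matrix whose $i$-th row equals $J_i$, and where $f(\beta)$ and $y$ are vectors with $i$-th components $f(x_i, \beta)$ and $y_i$, respectively. This is a set of linear equations that can be solved for $\delta$.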
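These normal equations give a single Gauss–Newton update. The following pure-Python sketch builds $J$ by forward differences (an assumption; an analytic Jacobian works equally well), forms $J^T J$ and $J^T[y - f(\beta)]$, and solves for $\delta$. For a model that is linear in $\beta$ the linearization is exact, so one step from any starting point lands on the ordinary least-squares solution. The straight-line model, the data and the helper names are hypothetical.

```python
def solve(a, b):
    """Solve the square linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def jacobian(model, beta, xs, h=1e-7):
    """Forward-difference Jacobian: row i approximates the gradient of f(x_i, beta)."""
    base = [model(x, beta) for x in xs]
    J = [[0.0] * len(beta) for _ in xs]
    for j in range(len(beta)):
        step = h * max(1.0, abs(beta[j]))
        shifted = list(beta)
        shifted[j] += step
        for i, x in enumerate(xs):
            J[i][j] = (model(x, shifted) - base[i]) / step
    return J


def gauss_newton_step(model, beta, xs, ys):
    """Solve (J^T J) delta = J^T [y - f(beta)] for the increment delta."""
    J = jacobian(model, beta, xs)
    r = [y - model(x, beta) for x, y in zip(xs, ys)]
    n = len(beta)
    JtJ = [[sum(row[i] * row[k] for row in J) for k in range(n)] for i in range(n)]
    Jtr = [sum(row[i] * ri for row, ri in zip(J, r)) for i in range(n)]
    return solve(JtJ, Jtr)


def line(x, beta):
    # Hypothetical straight-line model f(x, beta) = beta_0 + beta_1 * x.
    return beta[0] + beta[1] * x


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 7.1, 8.8]
beta = [0.0, 0.0]
delta = gauss_newton_step(line, beta, xs, ys)
beta = [b + d for b, d in zip(beta, delta)]
print(beta)  # close to the least-squares line: intercept 1.10, slope 1.96
```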

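Levenberg's contribution is to replace this equation by a "damped version",

$$\left(J^T J + \lambda I\right)\delta = J^T \left[ y - f(\beta) \right],$$

where $I$ is the identity matrix, giving the increment $\delta$ to the estimated parameter vector $\beta$.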
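One practical effect of the damping term is easy to see numerically: for $\lambda > 0$ the matrix $J^T J + \lambda I$ is positive definite, so the damped equations have a unique solution even when $J^T J$ is singular. The sketch below uses a hypothetical model $f(x, \beta) = \beta_0 \beta_1 x$, whose two Jacobian columns coincide at $\beta = (1, 1)$; the undamped system cannot be solved there, while the damped one can. The model, the data and the helper names are assumptions for illustration.

```python
def solve(a, b):
    """Solve the square linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def damped_step(J, r, lam):
    """Solve (J^T J + lam * I) delta = J^T r (Levenberg's damped equations)."""
    n = len(J[0])
    A = [[sum(row[i] * row[k] for row in J) + (lam if i == k else 0.0) for k in range(n)]
         for i in range(n)]
    g = [sum(row[i] * ri for row, ri in zip(J, r)) for i in range(n)]
    return solve(A, g)


xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.1, 5.9]
beta = (1.0, 1.0)
# Hypothetical model f(x, beta) = beta_0 * beta_1 * x; the analytic Jacobian columns are
# beta_1 * x and beta_0 * x, which are identical at beta = (1, 1).
J = [[beta[1] * x, beta[0] * x] for x in xs]
r = [y - beta[0] * beta[1] * x for x, y in zip(xs, ys)]

try:
    damped_step(J, r, 0.0)
    undamped_ok = True
except ZeroDivisionError:
    undamped_ok = False  # J^T J is exactly singular: elimination hits a zero pivot

delta = damped_step(J, r, 1e-3)
print(undamped_ok, delta)
```

Because the data cannot distinguish the two parameters here, the damped step splits the correction equally between them.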

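The (non-negative) damping factor $\lambda$ is adjusted at each iteration. If reduction of $S$ is rapid, a smaller value can be used, bringing the algorithm closer to the Gauss–Newton algorithm, whereas if an iteration gives insufficient reduction in the residual, $\lambda$ can be increased, giving a step closer to the gradient-descent direction. Note that the gradient of $S$ with respect to $\beta$ equals

$$\nabla_\beta S = -2\,J^T \left[ y - f(\beta) \right].$$

Therefore, for large values of $\lambda$, the step $\delta \approx J^T\left[y - f(\beta)\right]/\lambda$ is taken approximately along the negative gradient, i.e. in the steepest-descent direction. If either the length of the calculated step $\delta$ or the reduction of the sum of squares from the latest parameter vector $\beta + \delta$ falls below predefined limits, iteration stops and the last parameter vector $\beta$ is considered to be the solution.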
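The claim that a large $\lambda$ turns the step toward steepest descent can be checked numerically. This sketch uses a hypothetical Jacobian $J$ and residual vector $r = y - f(\beta)$, with helper names invented for illustration; it computes the damped step for increasing $\lambda$ and reports the cosine of the angle between $\delta$ and $-\nabla_\beta S = 2J^T r$.

```python
import math


def solve(a, b):
    """Solve the square linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def damped_step(J, r, lam):
    """Solve (J^T J + lam * I) delta = J^T r."""
    n = len(J[0])
    A = [[sum(row[i] * row[k] for row in J) + (lam if i == k else 0.0) for k in range(n)]
         for i in range(n)]
    g = [sum(row[i] * ri for row, ri in zip(J, r)) for i in range(n)]
    return solve(A, g)


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


J = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # hypothetical Jacobian
r = [1.0, -1.0, 2.0]                      # hypothetical residuals y - f(beta)
neg_grad = [2.0 * sum(row[i] * ri for row, ri in zip(J, r)) for i in range(2)]  # 2 J^T r

cosines = []
for lam in (0.0, 1.0, 100.0, 1e4, 1e6):
    delta = damped_step(J, r, lam)
    cosines.append(cosine(delta, neg_grad))
    print(f"lambda = {lam:9.1f}   cos(delta, -grad S) = {cosines[-1]:.8f}")
```

At $\lambda = 0$ the Gauss–Newton step points well away from the negative gradient; by $\lambda = 10^6$ the two directions practically coincide.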

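Levenberg's algorithm has the disadvantage that if the damping factor $\lambda$ is large, the matrix $J^T J + \lambda I$ is dominated by $\lambda I$, so the curvature information in $J^T J$ is effectively not used at all. Marquardt provided the insight that each component of the gradient can be scaled according to the curvature, so that there is larger movement along the directions where the gradient is smaller. This avoids slow convergence in the direction of small gradient. Therefore, Marquardt replaced the identity matrix $I$ with the diagonal matrix consisting of the diagonal elements of $J^T J$, resulting in the Levenberg–Marquardt algorithm:

$$\left(J^T J + \lambda \operatorname{diag}\left(J^T J\right)\right)\delta = J^T \left[ y - f(\beta) \right].$$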
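A useful consequence of Marquardt's choice of $\operatorname{diag}(J^T J)$ is that the step does not depend on the units of the parameters. If the parameters are rescaled so that $J$ becomes $JD$ for a positive diagonal matrix $D$, then, because $\operatorname{diag}(DJ^TJD) = D\operatorname{diag}(J^TJ)D$, the new step is $D^{-1}\delta$, i.e. the same move in the original units. Levenberg's $\lambda I$ does not have this property. The sketch below checks both numerically; the matrices, the scaling $D = \operatorname{diag}(10, 0.1)$ and the helper names are assumptions for illustration.

```python
def solve(a, b):
    """Solve the square linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def normal_eqs(J, r):
    """Return (J^T J, J^T r)."""
    n = len(J[0])
    JtJ = [[sum(row[i] * row[k] for row in J) for k in range(n)] for i in range(n)]
    Jtr = [sum(row[i] * ri for row, ri in zip(J, r)) for i in range(n)]
    return JtJ, Jtr


def levenberg_step(J, r, lam):
    A, g = normal_eqs(J, r)
    n = len(g)
    return solve([[A[i][k] + (lam if i == k else 0.0) for k in range(n)] for i in range(n)], g)


def marquardt_step(J, r, lam):
    A, g = normal_eqs(J, r)
    n = len(g)
    # Diagonal entries become A_ii * (1 + lam), i.e. A + lam * diag(A).
    return solve([[A[i][k] * (1.0 + lam if i == k else 1.0) for k in range(n)] for i in range(n)], g)


J = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]   # hypothetical Jacobian
r = [1.0, -1.0, 2.0]                       # hypothetical residuals
D = [10.0, 0.1]                            # hypothetical rescaling of the two parameters
J_scaled = [[row[k] * D[k] for k in range(2)] for row in J]  # J D

results = {}
for name, step in (("Levenberg", levenberg_step), ("Marquardt", marquardt_step)):
    d = step(J, r, 1.0)
    d_scaled = step(J_scaled, r, 1.0)
    back = [D[k] * d_scaled[k] for k in range(2)]  # map the rescaled step back: D * delta'
    results[name] = (d, back)
    print(f"{name}: original {d}, rescaled and mapped back {back}")
```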

A similar damping factor appears in Tikhonov regularization, which is used to solve linear ill-posed problems, as well as in ridge regression, an estimation technique in statistics.

Choice of damping parameter

Various more-or-less heuristic arguments have been put forward for the best choice of the damping parameter $\lambda$. Theoretical arguments exist showing why some of these choices guarantee local convergence of the algorithm; however, these choices can make the global convergence of the algorithm suffer from the undesirable properties of steepest descent, in particular very slow convergence close to the optimum.

The absolute values of any choice depend on how well scaled the initial problem is. Marquardt recommended starting with a value $\lambda_0$ and a factor $\nu > 1$. Initially, set $\lambda = \lambda_0$ and compute the residual sum of squares $S(\beta)$ after one step from the starting point with the damping factor $\lambda = \lambda_0$, and secondly with $\lambda_0/\nu$. If both of these are worse than the initial point, then the damping is increased by successive multiplication by $\nu$ until a better point is found with a new damping factor of $\lambda_0\nu^k$ for some $k$.

If use of the damping factor $\lambda/\nu$ results in a reduction in squared residual, then this is taken as the new value of $\lambda$ (and the new optimum location is taken as that obtained with this damping factor) and the process continues; if using $\lambda/\nu$ results in a worse residual but using $\lambda$ results in a better residual, then $\lambda$ is left unchanged and the new optimum is taken as the value obtained with $\lambda$ as damping factor.
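Putting the pieces together, the sketch below is a compact Levenberg–Marquardt loop that follows the damping strategy just described (try $\lambda/\nu$, then $\lambda$, then $\lambda\nu, \lambda\nu^2, \dots$), with Marquardt's diagonal scaling and a forward-difference Jacobian. It is a teaching sketch, not a production implementation: the defaults lam0 = 1e-3 and nu = 10, the stopping tolerances, the exponential test model and all function names are assumptions.

```python
import math


def solve(a, b):
    """Solve the square linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def jacobian(model, beta, xs, h=1e-7):
    """Forward-difference Jacobian: row i approximates the gradient of f(x_i, beta)."""
    base = [model(x, beta) for x in xs]
    J = [[0.0] * len(beta) for _ in xs]
    for j in range(len(beta)):
        step = h * max(1.0, abs(beta[j]))
        shifted = list(beta)
        shifted[j] += step
        for i, x in enumerate(xs):
            J[i][j] = (model(x, shifted) - base[i]) / step
    return J


def levenberg_marquardt(model, beta0, xs, ys, lam0=1e-3, nu=10.0, max_iter=200, tol=1e-12):
    """Minimise S(beta) = sum (y_i - f(x_i, beta))^2 using Marquardt's damping schedule."""
    def S(beta):
        return sum((y - model(x, beta)) ** 2 for x, y in zip(xs, ys))

    beta, lam = list(beta0), lam0
    s = S(beta)
    for _ in range(max_iter):
        J = jacobian(model, beta, xs)
        r = [y - model(x, beta) for x, y in zip(xs, ys)]
        n = len(beta)
        JtJ = [[sum(row[i] * row[k] for row in J) for k in range(n)] for i in range(n)]
        Jtr = [sum(row[i] * ri for row, ri in zip(J, r)) for i in range(n)]

        def trial(damping):
            # Marquardt's equations: (J^T J + damping * diag(J^T J)) delta = J^T r
            A = [[JtJ[i][k] * (1.0 + damping if i == k else 1.0) for k in range(n)]
                 for i in range(n)]
            delta = solve(A, Jtr)
            cand = [b + d for b, d in zip(beta, delta)]
            return cand, S(cand), delta

        cand, s_cand, delta = trial(lam / nu)      # first try less damping
        if s_cand < s:
            lam /= nu
        else:
            cand, s_cand, delta = trial(lam)       # then the current damping
            while s_cand >= s and lam < 1e16:      # then increase by factors of nu
                lam *= nu
                cand, s_cand, delta = trial(lam)
            if s_cand >= s:
                break                              # no improving step could be found
        improvement = s - s_cand
        beta, s = cand, s_cand
        if improvement < tol or max(abs(d) for d in delta) < tol:
            break
    return beta, s


def exp_model(x, beta):
    # Hypothetical model f(x, beta) = beta_0 * exp(beta_1 * x).
    return beta[0] * math.exp(beta[1] * x)


xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [2.0 * math.exp(-0.5 * x) for x in xs]  # noise-free data from beta = (2, -0.5)
beta, s = levenberg_marquardt(exp_model, [1.0, 0.0], xs, ys)
print(beta, s)
```

Trying the smaller damping first lets the loop drift toward Gauss–Newton behaviour while steps keep succeeding, and falling back to larger damping only when they fail.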

Example

[Figures 1, 2 and 3: Poor fit, Better fit, Best fit]

In this example we try to fit the function $y = a\cos(bX) + b\sin(aX)$ using the Levenberg–Marquardt algorithm implemented in GNU Octave as the leasqr function. The three graphs, Figs. 1, 2 and 3, show progressively better fits for the parameters $a = 100$, $b = 102$ used in the initial curve. Only when the parameters in Fig. 3 are chosen closest to the original values do the curves fit exactly. This equation is an example of very sensitive dependence on initial conditions for the Levenberg–Marquardt algorithm. One reason for this sensitivity is the existence of multiple minima: the function $\cos(\beta x)$ has minima at parameter values $\hat\beta$ and $\hat\beta + 2n\pi$.
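The multiple-minima explanation can be checked with a one-parameter toy version of the problem. Assuming integer sample points $x = 0, 1, \dots, 9$ and noise-free data generated with $\hat\beta = 1$ (both assumptions of this sketch, not the data behind the figures), $S(\beta) = \sum_i [y_i - \cos(\beta x_i)]^2$ vanishes at every $\hat\beta + 2n\pi$, so a local method can converge to any of these depending on where it starts. The script scans a grid of $\beta$ values and lists the near-zero local minima it finds; the function name and grid settings are hypothetical.

```python
import math


def sum_of_squares(beta, xs, ys):
    """S(beta) for the one-parameter model f(x, beta) = cos(beta * x)."""
    return sum((y - math.cos(beta * x)) ** 2 for x, y in zip(xs, ys))


beta_hat = 1.0
xs = [float(k) for k in range(10)]          # integer sample points (assumption)
ys = [math.cos(beta_hat * x) for x in xs]   # noise-free data

grid = [i * 0.001 for i in range(14001)]    # beta from 0 to 14
vals = [sum_of_squares(b, xs, ys) for b in grid]
minima = [grid[i] for i in range(1, len(grid) - 1)
          if vals[i] < vals[i - 1] and vals[i] < vals[i + 1] and vals[i] < 1e-3]
print([round(b, 3) for b in minima])
```

Because cosine is even, the scan also finds zeros near $2n\pi - \hat\beta$ for this data.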

Notes

1. The algorithm was first published by Kenneth Levenberg, while working at the Frankford Army Arsenal. It was rediscovered by Donald Marquardt, who worked as a statistician at DuPont, and independently by Girard, Wynne and Morrison.

See also

∙ Trust region

References

∙ Kenneth Levenberg (1944). "A Method for the Solution of Certain Non-Linear Problems in Least Squares". The Quarterly of Applied Mathematics 2: 164–168.

∙ A. Girard (1958). Rev. Opt 37: 225, 397.

∙ C. G. Wynne (1959). "Lens Designing by Electronic Digital Computer: I". Proc. Phys. Soc. London 73 (5): 777. doi:10.1088/0370-1328/73/5/310.

∙ Jorge J. Moré and Daniel C. Sorensen (1983). "Computing a Trust-Region Step". SIAM J. Sci. Stat. Comput. (4): 553–572.

∙ D. D. Morrison (1960). Jet Propulsion Laboratory Seminar proceedings.

∙ Donald Marquardt (1963). "An Algorithm for Least-Squares Estimation of Nonlinear Parameters". SIAM Journal on Applied Mathematics 11 (2): 431–441. doi:10.1137/0111030.

∙ Philip E. Gill and Walter Murray (1978).